In the world of artificial intelligence (AI), deep learning has emerged as one of the most transformative technologies, powering innovations across industries such as healthcare, finance, automotive, and entertainment. But what exactly is deep learning, and how does it work? To truly understand this groundbreaking technology, it’s essential to explore its core concepts, particularly neural networks, which form the backbone of deep learning models.

In this article, we’ll break down deep learning in a simple and clear way, explaining how neural networks function, the types of neural networks used in deep learning, and the diverse applications of this technology in real-world scenarios. Whether you’re a beginner curious about AI or a professional looking to deepen your understanding, this guide will provide you with the knowledge you need.

What is Deep Learning?

At its core, deep learning is a subset of machine learning, which itself is a branch of artificial intelligence (AI) focused on creating systems that can learn from and make decisions based on data. Deep learning takes this concept a step further by using algorithms known as neural networks, which are loosely inspired by the way the human brain processes information.

Unlike traditional machine learning models, which often depend on hand-engineered features or hand-written rules, deep learning algorithms learn to recognize patterns directly from raw data. They excel in tasks such as image and speech recognition, natural language processing (NLP), and game playing, all without needing explicitly programmed rules for each task.

The term “deep” in deep learning refers to the multiple layers of processing involved in these networks. A deep neural network typically consists of many layers, each designed to progressively extract higher-level features from the input data. This multi-layered approach allows deep learning systems to solve complex problems with remarkable accuracy.

Neural Networks: The Building Blocks of Deep Learning

What are Neural Networks?

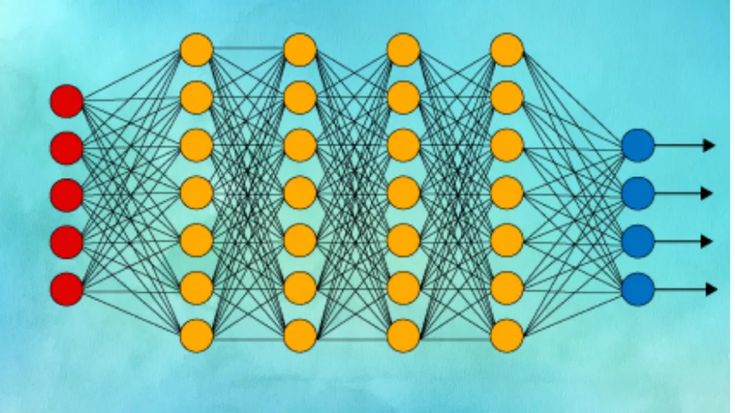

Neural networks are computational models inspired by the human brain’s network of neurons. In the human brain, neurons are connected to one another through synapses, allowing signals to travel from one neuron to another. This network of neurons processes information by taking inputs, applying transformations, and generating outputs. A neural network functions similarly, taking inputs (data), performing computations in hidden layers, and producing an output (predictions or classifications).

The fundamental building blocks of neural networks include:

- Neurons: These are the individual units of the network that perform computations. Each neuron receives input, applies a transformation (often through an activation function), and sends the result to the next layer of neurons.

- Layers: Neural networks consist of multiple layers of neurons:

  - Input Layer: The first layer that receives the raw data (e.g., an image, text, or time series).

  - Hidden Layers: Intermediate layers where most of the learning takes place. These layers extract features from the data.

  - Output Layer: The final layer that produces the result (e.g., classification labels, predicted values).

- Weights and Biases: Each connection between neurons has a weight, which represents the strength of the connection. A bias is a value added to the weighted sum of a neuron’s inputs before the activation function is applied, letting the neuron shift its output independently of its inputs. Weights and biases are adjusted during the training process.

- Activation Function: After a neuron computes its weighted sum of inputs and bias, it applies an activation function to that sum. The activation function determines how strongly the signal passes to the next layer and introduces the non-linearity that lets the network model complex relationships. Popular activation functions include ReLU (Rectified Linear Unit), sigmoid, and tanh.
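To make the list above concrete, here is a minimal sketch of what a single neuron computes. The input values, weights, and bias are made up for illustration, and the function name `neuron` is our own, not part of any library.

```python
import math

def relu(z):
    # ReLU passes positive values through and clamps negatives to zero.
    return max(0.0, z)

def sigmoid(z):
    # Sigmoid squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    # Weighted sum of the inputs plus the bias (the pre-activation value).
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation function is applied to that sum.
    return activation(z)

# Illustrative values only.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.5

print(neuron(inputs, weights, bias, relu))
print(neuron(inputs, weights, bias, sigmoid))
print(neuron(inputs, weights, bias, math.tanh))
```

With these numbers the weighted sum plus bias is -0.25, so ReLU outputs 0.0 while sigmoid and tanh return small values on either side of their midpoints. The same inputs can produce quite different signals depending on the activation chosen.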

How Neural Networks Learn: Training and Backpropagation

The learning process in neural networks involves adjusting the weights and biases based on how well the model performs. This is typically done through an iterative process known as backpropagation combined with an optimization algorithm like gradient descent.

- Forward Propagation: During forward propagation, the input data is passed through the network, layer by layer, until an output is generated. The output is compared to the true label (or ground truth) to compute the error (or loss).

- Backpropagation: Once the error is calculated, backpropagation works out how much each weight and bias contributed to it. It does this by calculating the gradient of the loss function with respect to each weight and bias, applying the chain rule backward from the output layer to the input layer. The weights and biases are then adjusted in the opposite direction of the gradient to minimize the error.

- Gradient Descent: Gradient descent is the optimization technique that applies these adjustments during training, moving each weight and bias a small step against its gradient. By iteratively reducing the error, the network becomes better at making predictions. There are variations of gradient descent, including stochastic gradient descent (SGD), which updates weights after processing each training example, and mini-batch gradient descent, which updates after a batch of examples.

The combination of forward propagation, backpropagation, and gradient descent allows deep learning models to learn from data and improve their performance over time.
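The three steps can be seen working together in a very small example. The sketch below trains a single linear neuron (no hidden layers) on toy data, so the "backpropagation" step reduces to one application of the chain rule. The data, the learning rate, and the number of epochs are assumptions chosen for illustration.

```python
# Toy data drawn from y = 2x + 1 (made up for illustration).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0        # parameters start at arbitrary values
learning_rate = 0.05   # step size (an assumed hyperparameter)

def mean_squared_error(w, b):
    # Average squared difference between predictions and true values.
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

initial_loss = mean_squared_error(w, b)

for epoch in range(2000):
    grad_w, grad_b = 0.0, 0.0
    for x, y in data:
        prediction = w * x + b   # forward propagation
        error = prediction - y   # how far off the prediction is
        # Chain rule on the squared error:
        # d(error^2)/dw = 2 * error * x and d(error^2)/db = 2 * error
        grad_w += 2 * error * x / len(data)
        grad_b += 2 * error / len(data)
    # Gradient descent: step against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b)
print(round(w, 3), round(b, 3), final_loss < initial_loss)
```

This version uses the whole dataset for every update. Moving the two update lines inside the inner loop (and dropping the division by `len(data)`) would turn it into the per-example SGD variant described above.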

Types of Neural Networks in Deep Learning

There are several different types of neural networks, each tailored for specific types of data and problems. Some of the most commonly used neural networks in deep learning include:

1. Feedforward Neural Networks (FNNs)

Feedforward neural networks are the most basic type of neural network, where information flows in one direction, from the input layer to the output layer, with no loops. These networks are typically used for simple tasks like classification and regression.

In FNNs, data is passed through the hidden layers, and each layer applies its weights, biases, and activation function before sending the result to the next layer. The network generates a prediction at the output layer, which during training is compared to the actual result.
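As a rough illustration of that one-way flow, the following sketch pushes an input through one hidden layer and one output layer. The layer sizes and all parameter values are hand-picked assumptions, and `dense` and `predict` are hypothetical helper names rather than library functions.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    # No activation: commonly used on the output of a regression network.
    return z

def dense(inputs, weights, biases, activation):
    # One row of weights and one bias per neuron in the layer.
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output.
hidden_weights = [[1.0, -1.0], [0.5, 0.5]]
hidden_biases = [0.0, -1.0]
output_weights = [[2.0, 1.0]]
output_biases = [0.5]

def predict(inputs):
    # Data moves strictly forward: input -> hidden -> output.
    hidden = dense(inputs, hidden_weights, hidden_biases, relu)
    return dense(hidden, output_weights, output_biases, identity)

print(predict([3.0, 1.0]))  # prints [5.5]
```

In a trained network these parameters would be learned from data rather than written by hand.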

2. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are primarily used for tasks involving image data, such as image classification, object detection, and facial recognition. CNNs are designed to automatically detect spatial hierarchies in images, making them highly effective for processing visual information.

CNNs use convolutional layers to apply filters (or kernels) to the input image, extracting features like edges, textures, and patterns. These features are then passed through additional layers, including pooling layers (which reduce the spatial dimensions of the image) and fully connected layers (which produce the final output).
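Here is a small sketch of the two operations just described: sliding a filter over an image, then pooling the result. The 5x5 image and the vertical-edge filter are invented for illustration. In a real CNN the filter values are learned during training, and the function names below are our own.

```python
def convolve2d(image, kernel):
    # "Valid" cross-correlation, the operation deep learning libraries
    # usually call convolution: slide the kernel over every position
    # where it fits and sum the element-wise products.
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    # Keep the largest value in each non-overlapping size x size window.
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

features = convolve2d(image, kernel)  # 4x4 feature map
pooled = max_pool2d(features)         # 2x2 after pooling

print(features)
print(pooled)  # prints [[2, 0], [2, 0]]
```

The feature map is non-zero only in the column where the image changes from dark to bright, and pooling halves each spatial dimension while keeping that strong response.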

3. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are used for sequential data, such as time series, speech, and natural language. Unlike feedforward networks, RNNs have connections that loop back, allowing information to persist across time steps. This makes them ideal for tasks like language modeling, machine translation, and speech recognition.

RNNs maintain a “memory” of previous inputs in the form of hidden states, enabling the network to process data in a sequence, making them suitable for time-dependent tasks.
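The idea of a hidden state carrying information forward can be sketched with a one-dimensional recurrent cell. The weights below are arbitrary assumptions, and `rnn_step` is a hypothetical name. Real RNNs use weight matrices and vector-valued states.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    # The new hidden state mixes the current input with the previous state.
    return math.tanh(w_x * x + w_h * h_prev + b)

# Arbitrary illustrative parameters.
w_x, w_h, b = 0.5, 0.8, 0.0

# Only the first time step carries a signal; the rest are zeros.
sequence = [1.0, 0.0, 0.0]

h = 0.0  # initial hidden state
hidden_states = []
for x in sequence:
    h = rnn_step(x, h, w_x, w_h, b)
    hidden_states.append(h)

print(hidden_states)
```

Even though the second and third inputs are zero, the hidden state stays positive: the network still "remembers" the first input, though the memory fades with each step.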

4. Long Short-Term Memory (LSTM)

A type of RNN, Long Short-Term Memory (LSTM) networks are designed to address the vanishing gradient problem that standard RNNs often face. LSTMs are capable of learning long-term dependencies in sequential data by using gates to control the flow of information through the network. This makes them particularly effective for tasks like speech recognition, sentiment analysis, and time series forecasting.
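To show what "gates" means in practice, here is a scalar sketch of one LSTM step following the commonly used formulation with forget, input, and output gates. All parameter values are invented for illustration (including the deliberately large forget-gate bias), and the parameter dictionary layout is our own convention.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    # Each gate looks at the current input and the previous hidden state.
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    # Cell state: keep part of the old memory, add part of the new candidate.
    c = f * c_prev + i * g
    # Hidden state: a gated view of the cell state.
    h = o * math.tanh(c)
    return h, c

# Hypothetical parameters; the large forget bias keeps the gate mostly open.
params = {
    "wf": 0.5, "uf": 0.5, "bf": 3.0,
    "wi": 0.5, "ui": 0.5, "bi": 0.0,
    "wo": 0.5, "uo": 0.5, "bo": 0.0,
    "wg": 0.5, "ug": 0.5, "bg": 0.0,
}

h, c = 0.0, 0.0
cell_states = []
for x in [1.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    cell_states.append(c)

print(cell_states)
```

Because the forget gate stays close to 1 here, most of the cell state written at the first step survives the later zero inputs. That additive path through the cell state is what helps gradients flow across many time steps.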

5. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) consist of two neural networks: a generator and a discriminator. The generator creates synthetic data (e.g., images), while the discriminator evaluates the authenticity of the data. The two networks are trained together, with the generator aiming to fool the discriminator, and the discriminator striving to correctly identify real versus fake data.

GANs have been used in applications such as image generation, deepfake videos, and art creation, showcasing their ability to create highly realistic synthetic data.
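The tug-of-war between the two networks shows up directly in their loss functions. The sketch below uses a common cross-entropy formulation (with the so-called non-saturating generator loss). It is one of several variants in use, and the function names and probability values are assumptions for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    # d_real: discriminator's probability that a real sample is real.
    # d_fake: discriminator's probability that a generated sample is real.
    # Low when real samples score near 1 and generated samples near 0.
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    # Low when the discriminator scores generated samples near 1,
    # i.e. when the generator succeeds in fooling it.
    return -math.log(d_fake)

# Case 1: the discriminator easily spots the fake (scores it 0.1).
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))

# Case 2: the discriminator is fooled (scores the fake 0.9).
print(discriminator_loss(0.9, 0.9), generator_loss(0.9))
```

Moving from the first case to the second, the generator's loss falls while the discriminator's rises, which is the adversarial relationship in numerical form.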

6. Transformer Networks

Transformer networks are particularly effective for tasks involving sequential data, such as machine translation and text generation. Unlike RNNs, transformers do not require data to be processed one step at a time. Instead, they rely on self-attention mechanisms that let every position in the input attend to every other position at once, which makes training easier to parallelize and scale.

Transformers are the basis of many advanced NLP models, including BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have revolutionized the field of natural language processing.
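Self-attention itself is a short computation. The following is a simplified sketch of scaled dot-product self-attention. As a stated simplification, the token vectors are used directly as queries, keys, and values, whereas real transformers first apply learned projections and use multiple attention heads. The token values are made up.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    # Simplification: queries, keys, and values are the tokens themselves.
    d = len(tokens[0])
    outputs, all_weights = [], []
    for q in tokens:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
            for k in tokens
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, tokens))
            for j in range(d)
        ])
    return outputs, all_weights

# Three made-up 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and describes how much one token draws on every other token. Nothing in the loop depends on the previous iteration, which is why the rows can be computed in parallel.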

Applications of Deep Learning

Deep learning has already made significant contributions across various industries. Here are just a few examples of how deep learning is being applied:

1. Healthcare

In healthcare, deep learning models are used for medical image analysis, such as detecting tumors in X-rays and MRIs, identifying patterns in genetic data, and predicting patient outcomes. For instance, AI models can analyze mammograms to detect early signs of breast cancer, potentially saving lives through earlier diagnosis.

2. Finance

In finance, deep learning is used for fraud detection, algorithmic trading, and credit scoring. By analyzing patterns in transaction data, deep learning models can identify unusual behavior that may indicate fraudulent activity. Additionally, deep learning is employed in risk management and investment strategies.

3. Autonomous Vehicles

Deep learning plays a central role in the development of autonomous vehicles. Convolutional neural networks are used to process images from cameras, helping the vehicle “see” and recognize objects like pedestrians, traffic signs, and other vehicles. Sequence models such as recurrent neural networks can process streams of sensor data over time, supporting the decisions the car makes as it navigates in real time.

4. Natural Language Processing (NLP)

Deep learning has dramatically improved the performance of NLP tasks, including machine translation, sentiment analysis, and text summarization. Models like BERT and GPT are capable of understanding and generating human-like text, making applications like chatbots, virtual assistants, and language translation more effective.

5. Entertainment

In the entertainment industry, deep learning is used in recommendation systems (such as Netflix’s content recommendations), content generation (such as AI-created art and music), and even movie production (for visual effects and animation). Deep learning algorithms analyze user preferences and suggest content that aligns with individual tastes.

Conclusion

Deep learning has become a cornerstone of modern AI development, revolutionizing industries and enabling machines to perform tasks that were once thought to be exclusively human domains. At the heart of deep learning are neural networks, which allow systems to learn from data and make complex decisions. As we continue to improve neural network architectures and overcome challenges like bias and data quality, the potential applications of deep learning will expand, opening up new possibilities for innovation and transformation.

Whether you’re interested in developing AI systems or simply curious about the technology, understanding deep learning and neural networks is crucial for grasping the future of artificial intelligence.